14. 03. 2007 14:03 | 09_EPFL

User-driven distributed control of collective assembly using mobile, networked miniature robots

Following the "Rolling microfunctions" workshop on networked swarm robotics, held between ECAL, fabric | ch and the SWIS-EPFL laboratory, Dr Julien Nembrini (in charge of the project for the Swiss Federal Institute of Technology) published the following post on the SWIS laboratory's research web pages:

------------------------

"User-driven distributed control of collective assembly using mobile, networked miniature robots

If swarm systems are to be used in human environments, a protocol for human-swarm interaction has to be defined to enable direct and intuitive control by users. Up to now research has concentrated on the difficult problem of controlling the swarm, whereas the interaction problem has been largely overlooked.

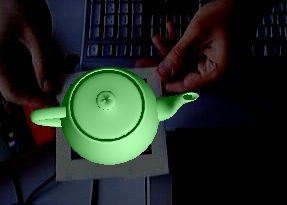

In collaboration with interaction design researchers, the project developed a demonstrator consisting of a fleet of mobile "lighting" robots moving on a large table, such that the swarm of robots forms a "distributed table light". In the presence of human users, the robots quickly aggregate into a lamp whose shape and function depend on the users' positions and behaviors.

The whole set-up consists of a collection of small robots moving and interacting on a table, a camera position tracker and a human-computer interface. The swarm-user interaction is designed as follows: the target configuration of the aggregate is controlled through crude position and attitude tracking of the users around the table. User tracking and robot tracking are integrated in software, and both configuration and positioning information are then sent to the robots.

Taking the specific task of ordered aggregation as a benchmark, the project studies a simple algorithm able to control the geometry of an aggregate consisting of embedded, real-time, self-locomoted robotic units endowed with limited computational and communication capabilities. The robots only use infrared proximity sensors and wireless communication.

In this case, because the aggregation problem is human-centered, time-to-completion becomes critical. Users will not accept waiting too long for the robots to aggregate, and the system has to react quickly to changes in the users' attitude. This is the reason for defining a hybrid algorithm in which global positional information is sent to the robots, which then choose their actions autonomously.

The project results from a collaboration with designers from Ecole Cantonale d'Art de Lausanne."
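
The hybrid scheme described in the quoted post (global positions broadcast to the robots, local choice of action on each robot) can be illustrated with a minimal sketch. This is not the SWIS algorithm: the slot-claiming rule, the function names (`choose_slot`, `step_towards`, `simulate`) and the step size are all assumptions made for the illustration, and the infrared proximity sensing and collision avoidance are left out.

```python
import math

def choose_slot(robot_id, robot_positions, slots):
    """Run locally by each robot on the broadcast data (hypothetical rule):
    robots claim slots in a fixed order (by id), each taking the nearest
    slot that is still free. Needs at least as many slots as robots."""
    free = list(slots)
    for rid in sorted(robot_positions):
        x, y = robot_positions[rid]
        best = min(free, key=lambda s: math.hypot(s[0] - x, s[1] - y))
        if rid == robot_id:
            return best
        free.remove(best)
    raise KeyError(robot_id)

def step_towards(pos, target, max_step):
    """Move at most max_step along the straight line to the target."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return target
    return (pos[0] + dx / dist * max_step, pos[1] + dy / dist * max_step)

def simulate(robot_positions, slots, max_step=0.05, max_rounds=1000):
    """Repeat 'broadcast positions, choose locally, move' until all robots
    sit on their slots. Returns the final positions and the round count."""
    positions = dict(robot_positions)
    for rounds in range(max_rounds):
        # Every robot sees the same global snapshot and decides on its own.
        targets = {rid: choose_slot(rid, positions, slots) for rid in positions}
        if all(positions[rid] == targets[rid] for rid in positions):
            return positions, rounds
        positions = {rid: step_towards(positions[rid], targets[rid], max_step)
                     for rid in positions}
    return positions, max_rounds

if __name__ == "__main__":
    robots = {1: (0.0, 0.0), 2: (1.0, 0.0), 3: (0.0, 1.0)}
    lamp_shape = [(0.5, 0.5), (0.6, 0.5), (0.5, 0.6)]  # assumed target slots
    final, rounds = simulate(robots, lamp_shape)
    print(final, rounds)
```

Because every robot applies the same deterministic rule to the same broadcast snapshot, no negotiation between robots is needed in this sketch, which is one way of keeping time-to-completion short.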

Posted by patrick keller at 14:03

EPFL - SWIS - Swarm-Intelligent Systems Group

The Swarm-Intelligent Systems Group of the EPFL (Swiss Federal Institute of Technology), represented by Prof. Alcherio Martinoli and postdoctoral assistant Julien Nembrini, has joined the Variable environment/ project and worked on Workshop#4 between June and December 2006.

Prof. Martinoli's laboratory belongs to the School of Computer and Communication Sciences, Institute of Communication Systems of the EPFL.

The SWIS laboratory is a member of Mobile Information & Communication Systems (MICS), a National Centre of Competence in Research.

The area of interest of the laboratory is in swarm-intelligent collective robotics.

Within the context of this collaboration, we will work with the E-PUCK platform (see image below). The goal is to develop a collaboration between humans and swarm bots, with a minimal spatial and lighting function for the bots.

Posted by patrick keller at 15:10

Particle filter-based camera tracker fusing marker and feature point cues

The paper entitled "Particle filter-based camera tracker fusing marker and feature point cues" has been accepted for oral presentation at the IS&T/SPIE Electronic Imaging Symposium 2007. This article is part of the Proceedings of the Visual Communications and Image Processing conference.

Download pdf.

This paper presents a video-based camera tracker that combines marker-based and feature point-based cues within a particle filter framework. The framework relies on their complementary performance. On the one hand, marker-based trackers can robustly recover camera position and orientation when a reference (marker) is available but fail once the reference becomes unavailable. On the other hand, filter-based camera trackers using feature point cues can still provide predicted estimates given the previous state. However, these trackers tend to drift and usually fail to recover when the reference reappears. Therefore, we propose a fusion where the estimate of the filter is updated from the individual measurements of each cue. More precisely, the marker-based cue is selected when the reference is available whereas the feature point-based cue is selected otherwise. Evaluations on real cases show that the fusion of the two approaches outperforms the individual tracking results.
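
The cue selection inside the filter update can be sketched as follows. This is a toy illustration and not the tracker from the paper: the state is a single scalar instead of a full camera pose, both cues are assumed to deliver a direct noisy measurement of that state, and the function names and noise levels (`motion_sigma`, `marker_sigma`, `feature_sigma`) are invented for the example.

```python
import math
import random

def gaussian_likelihood(error, sigma):
    """Unnormalised Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)

def filter_step(particles, marker_meas, feature_meas, rng,
                motion_sigma=0.05, marker_sigma=0.02, feature_sigma=0.2):
    """One predict/update/resample cycle of a 1D particle filter.

    marker_meas is None when the marker (reference) is not visible; in that
    case the noisier feature-point measurement drives the update instead.
    """
    # Predict: random-walk motion model (an assumption of this sketch).
    predicted = [p + rng.gauss(0.0, motion_sigma) for p in particles]

    # Select the cue: marker when available, feature points otherwise.
    if marker_meas is not None:
        meas, sigma = marker_meas, marker_sigma
    else:
        meas, sigma = feature_meas, feature_sigma

    # Update: weight each particle by how well it explains the measurement.
    weights = [gaussian_likelihood(p - meas, sigma) for p in predicted]
    if sum(weights) == 0.0:
        # All particles are implausible: reinitialise around the measurement.
        return [meas + rng.gauss(0.0, sigma) for _ in predicted]

    # Resample in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))

def estimate(particles):
    """Point estimate of the state: the mean of the particle set."""
    return sum(particles) / len(particles)

if __name__ == "__main__":
    rng = random.Random(0)
    particles = [rng.uniform(-1.0, 1.0) for _ in range(500)]
    for _ in range(10):  # marker visible
        particles = filter_step(particles, 0.3, 0.35, rng)
    for _ in range(5):   # marker occluded, feature points only
        particles = filter_step(particles, None, 0.35, rng)
    print(estimate(particles))
```

Since both cues update the same particle set, the estimate does not jump when the marker disappears or reappears; only the measurement source and its assumed noise level change.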

Posted by david.marimon at 16:37

CV Lab - EPFL / Softwares

Pascal Fua's CV Lab at EPFL has released free software for camera calibration in the context of pattern recognition (markers) for AR applications. It could be interesting to merge these functionalities with our planned open source XjARToolkit in the future.

Software download area on the CV Lab's web pages

Posted by patrick keller at 17:23

Fusion of marker-based tracking and particle-filter based camera tracking

We have developed a novel video-based tracker that combines a marker-based tracker and a particle filter-based camera tracker. The framework relies on their complementary performance. We propose a fusion where the overall estimate is selected among the individual estimates. More precisely, the marker-based tracker is selected when the reference is available whereas the particle filter-based camera tracker is selected otherwise.

Some snapshots of the fusion tracker's performance when faced with:

Occlusions

Marker partially out of the field of view

Video input with little illumination

Video input with saturated illumination

David Marimon

Signal Processing Institute

Posted by david.marimon at 11:08

Diploma Project: Robust Feature Point Extraction and Tracking for Augmented Reality

On Tuesday 20th September, Bruno Palacios (an Erasmus student from UPC-Barcelona) presented his diploma project, entitled Robust Feature Point Extraction and Tracking for Augmented Reality and supervised by David Marimon and Prof. T. Ebrahimi. This work enhances the AR system already developed by using more robust tracking techniques.

Abstract of the report________

For augmented reality applications, accurate estimation of the camera pose is required. An existing video-based markerless tracking system, developed at the ITS, presents several weaknesses towards this goal. In order to improve the video tracker, several feature point extraction and tracking techniques are compared in this project. The techniques with the best performance in the framework of augmented reality are applied to the present system in order to demonstrate the system’s improvement.

A new implementation for feature point extraction, based on the Harris detector, has been chosen. It provides better performance than the former Lindeberg-based implementation. The tracking implementation has been modified in three ways. Track continuation capabilities have been added to the system with satisfactory results. Moreover, the search region used for feature matching has been modified to take advantage of pose estimation feedback. These two modifications, working together, succeed in improving the system’s performance. Two alternatives to the photometric test, based on Gaussian derivatives and Gabor descriptors, have been implemented, showing that they are not suitable for real-time augmented reality. Several improvements are proposed as future work.
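
For readers unfamiliar with the Harris detector mentioned in the abstract, the corner response it relies on can be sketched in a few lines. This is a textbook-style illustration and not the implementation from the diploma project: it uses central-difference gradients, a plain 3x3 box window instead of Gaussian weighting, and the commonly used constant k = 0.04, all of which are assumptions of this sketch.

```python
def harris_response(image, k=0.04):
    """Harris corner response for a 2D list of grey values.

    Large positive values indicate corners, negative values indicate edges,
    and values near zero indicate flat regions.
    """
    h, w = len(image), len(image[0])

    # Image gradients by central differences (border pixels stay at zero).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (image[y][x + 1] - image[y][x - 1]) / 2.0
            iy[y][x] = (image[y + 1][x] - image[y - 1][x]) / 2.0

    response = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Structure tensor summed over a 3x3 window around the pixel.
            sxx = sxy = syy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            response[y][x] = det - k * trace * trace
    return response

if __name__ == "__main__":
    # Synthetic test image: a bright square in the lower-right quadrant.
    size = 12
    img = [[1.0 if (y >= 6 and x >= 6) else 0.0 for x in range(size)]
           for y in range(size)]
    r = harris_response(img)
    print(r[6][6], r[9][6], r[2][2])  # corner, edge, flat region
```

A feature point extractor would then keep local maxima of this response above a threshold; that selection step is omitted here.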

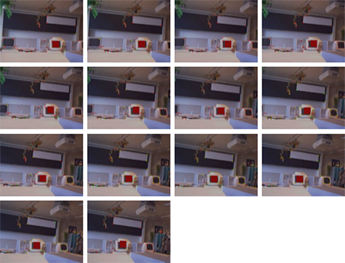

Results_____________

Scene augmented with a virtual red square in front of a real monitor (click on image for bigger resolution).

Posted by david.marimon at 17:38

CALL FOR ATTENDANCE: MOBILE HCI 2005, September 19-22 2005, Salzburg

The 7th International Conference on

Human-Computer Interaction with Mobile Devices and Services

*** Early Registration Deadline: August 5 2005 ***

Conference Chair:

Manfred Tscheligi, ICT&S, University of Salzburg

Organisational Committee:

Regina Bernhaupt, ICT&S, University of Salzburg

Kristijan Mihalic, University of Salzburg

www.mobilehci.org

MobileHCI 05 is the 7th conference in the MobileHCI series and will be held at the University of Salzburg, Salzburg, Austria.

The 7th conference in the MobileHCI series provides a forum for academics and practitioners to discuss the challenges, potential solutions and innovations towards effective interaction with mobile systems and services. It covers the analysis, design, evaluation and application of human-computer interaction techniques and approaches for all mobile computing devices and services.

Keynote: Marco Susani, Director Advanced Concepts Design Group, Motorola (US)

The main conference sessions are on:

* Social Communication, Privacy Management, Trust

* Designing Mobile Interaction

* Multimodal and Multidevice Issues

* Input Techniques and Visualization Approaches

* Various Aspects of Context

* Mobile Guides and Mobile Navigation

* Aspects of Social Mobility

* Finding Facts of Mobile Interaction

* Optimising Mobile Navigation

* Capturing Context

* Evaluating Mobile Content

Together with the following workshops and tutorials:

* AIMS 2005: Artificial Intelligence in Mobile Systems

* Context in mobile HCI

* Enabling and improving the use of mobile e-services

* HCI in Mobile Guides

* MOBILE MAPS 2005: Interactivity and Usability of Map-based Mobile Services

* Designing with the Human Memory in Mind

* Development of Interactive Applications for Mobile Devices

* Handheld Usability: Design, Prototyping, & Usability Testing for Mobile Phones

* How to Set-up a Corporate User Experience Team: Key Success Factors, Strategic Positioning, and Sustainable Organisational Implementations

* Mobile Interaction Design (Matt Jones, Gary Marsden)

* Data Collection and Analysis Tools for Mobile HCI Studies

In addition the conference contains Posters, Demonstrations and Industrial Case Studies.

The full programme is available at www.mobilehci.org together with other information on the conference, Salzburg, as well as registration.

The conference is sponsored by Nokia and Microsoft Research and supported by ACM SIGCHI and ACM SIGMOBILE.

For further information contact:

Conference Organizers and Contact:

Manfred Tscheligi (Conference Chair)

Regina Bernhaupt, Kristijan Mihalic (Organisational Committee)

Human-Computer & Usability Unit

ICT&S Center for Advanced Studies and Research in Information and Communication Technologies

University of Salzburg

Sigmund-Haffner-Gasse 18

5020 Salzburg, Austria

Phone: +43-662-8044-4813

Fax: +43-662-6389-4800

Email: mobilehci@icts.sbg.ac.at

Web: www.mobilehci.org

Posted by david.marimon at 10:45

Announcement: Public Release of ARToolKitPlus

Dear all AR developers,

due to many requests we are releasing our (until now) internal version of ARToolKit, called ARToolKitPlus.

ARToolKitPlus is not a successor to the original ARToolKit, which is still being developed and supported. In fact, we can assure you that we will not have the capacity to provide support as extensive as that currently provided for ARToolKit.

ARToolKitPlus could actually be called ARToolKitMinus, since we removed all support for reading cameras and rendering VRML.

What's left is an enhanced version of ARToolKit's vision core (those files that you can find in lib\SRC\AR in the ARToolKit distribution).

Main new features are:

- C++ class-based API (compile-time parametrization using templates)

- 512 id-based markers (no speed penalty due to large number of markers)

- New camera pixel-formats (RGB565, Gray)

- Variable marker border width

- Many speed-ups for low-end devices (fixed-point arithmetic, look-up tables, etc.)

- Tools: pattern-file to TGA converter, pattern-file mirroring, off-line camera calibration, id-marker generator
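
To give an idea of what integer-only processing of an RGB565 image with a look-up table can look like, here is a minimal sketch. It is not code from ARToolKitPlus, which is a C++ library: the function names, the bit-replication step and the luma weights 77/150/29 (an integer approximation of 0.299/0.587/0.114 scaled by 256) are assumptions chosen for the illustration.

```python
def rgb565_to_gray(pixel):
    """Convert one 16-bit RGB565 pixel to an 8-bit grey value, integers only."""
    # Unpack the 5-bit red, 6-bit green and 5-bit blue fields.
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F

    # Expand each channel to 8 bits by replicating its high bits.
    r8 = (r5 << 3) | (r5 >> 2)
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)

    # Fixed-point weighted sum: weights add up to 256, so shift right by 8.
    return (77 * r8 + 150 * g8 + 29 * b8) >> 8

# Look-up table: one precomputed grey value per possible 16-bit pixel,
# so converting a frame costs a single table access per pixel.
GRAY_LUT = [rgb565_to_gray(p) for p in range(1 << 16)]

def convert_frame(pixels):
    """Convert a sequence of RGB565 pixels to grey values via the table."""
    return [GRAY_LUT[p] for p in pixels]

if __name__ == "__main__":
    print(convert_frame([0x0000, 0xF800, 0x07E0, 0x001F, 0xFFFF]))
```

On devices without a floating-point unit, trading a 64K-entry table for per-pixel arithmetic is a typical choice; whether it pays off depends on the cache size of the target.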

ARToolKitPlus is known to compile for VS.NET 2003, VC 6.0, eVC 4.0.

The project's webpage can be found at

http://studierstube.org/handheld_ar/artoolkitplus.php where you can find a more detailed overview about the changes, the API documentation, some short code samples and a download link.

PLEASE NOTE: This version of ARToolKit is only targeted to experienced C++ programmers. It does not read camera images or render geometry of any kind (especially no VRML support) nor is it an out-of-the box solution. In contrast to the original ARToolKit, this library is not meant for starters in developing AR software. No binary executables (except for some tools) are provided.

If you have any questions, suggestions or comments please send them to me or the artoolkit mailing list.

bye,

Daniel Wagner

______________________

appeared in ARFORUM on the 13th May 05

Posted by david.marimon at 14:36

EPFL - STI - Signal Processing Institute

The Signal Processing Institute of the EPFL (Swiss Federal Institute of Technology), Prof. Touradj Ebrahimi and PhD student David Marimon Sanjuan are partners of the Ra&D project Variable environment/.

The Institute belongs to the "Sciences et Techniques de l'ingénieur" faculty.

David Marimon's PhD research is about an EPFL extension (vision and signal processing) to the open source software AR Toolkit. The software they are working on is therefore dedicated to Augmented Reality.

Check out a description of their technology HERE.

Posted by patrick keller at 16:40

New demonstrator version available

New version downloadable from

http://itswww.epfl.ch/~marimon/software.php (Binaries & source code)

with a menu at the beginning to choose the output window type:

320x240

640x480

640x480 fullscreen

Hope you enjoy it!

David

Posted by david.marimon at 12:52

10. 06. 2005 10:47 | 09_EPFL

Designer's AR Toolkit

email excerpt:

----------------------------------------------------------------------

To: Discussion on Augmented and Virtual Reality Technology (AR Forum); ARToolkit ARToolKit; director - Shockwave - and Flash Game Production; dart-announce@cc.gatech.edu; Dart Mailing List

Subject: [ARFORUM] Announcing release 2.0 of DART, the Designer's AR Toolkit

We are pleased to announce that a new release of DART (The Designer's Augmented Reality Toolkit) is available for download from our website.

http://www.gvu.gatech.edu/dart

For those unfamiliar with DART, it is a powerful, extensible AR authoring environment built on top of Macromedia Director. DART is available for free; the license (which is meant to be very liberal) is in each of the Director casts. DART is the product of a research project aimed at understanding how a wide variety of people think about creating experiences and systems that mix 3D virtual and physical spaces. So, what we really would like in return is feedback (what you think, what works and what doesn't, and what you do with it) and acknowledgment when you publish or distribute things built with DART.

For an overview of DART, please read the DART_Overview.doc available in the download site, or for a more detailed look at the research behind DART, please read our UIST 2004 paper, available at http://www.cc.gatech.edu/ael/papers/dart-uist04.html

(Blair MacIntyre, Maribeth Gandy, Steven Dow, and Jay David Bolter. "DART: A Toolkit for Rapid Design Exploration of Augmented Reality Experiences." Proceedings of the conference on User Interface Software and Technology (UIST'04), October 24-27, 2004, Santa Fe, New Mexico).

(If you are going to be at SIGGRAPH, we will be presenting this paper in the "Reprise of UIST and I3D" session, as one of the best papers from UIST last year!)

DART consists of a set of Lingo scripts and an Xtra plug-in that extends Macromedia Director with support for live cameras, marker tracking (using the ARToolkit or ARTag) and various hardware sensors and distributed shared memory (using VRPN). We chose to develop on top of Director as it provides a very full featured development environment with an active developer community and cross platform support (Win and Mac/OSX), something that academic software projects do not often have. All the scripts are open and editable, allowing a developer to easily create new components as needed.

Some Highlights of version 2.0

------------------------------

In addition to significant bug fixes and general improvements throughout the code and Xtra, some other highlights include ...

-Corrected scene hierarchy when loading 3D models. Now the parent-child relationships are preserved.

-Better handling of animations. Now includes support for bones as well as keyframe animations.

-Created a LightActor. Now you can place lights of all types in the scene and control them with a Transform script just like any other 3D Actor.

-Better 3D world cleanup. Fixed problems with 3D elements not being removed from the world when they went out of scope.

-New set of 2D HUD components.

-Improved TextActor that supports actions such as programmatically setting the text, changing font etc.

-New OverlaySketchActor that allows you to display still and animated images on the HUD.

-New OverlayMapActor that creates a 2D overhead map that automatically shows the locations and orientations of actors and cameras in the scene.

-New OverlayProgressBarActor that can be used to show a progress bar on the HUD that can be controlled programmatically.

-Support for creating large numbers of cues and actions.

-MultipleTimeCue lets you define lists of timecues in a textfile.

-MultipleActions allows you to define lists of actions for an actor in a textfile.

-New FlashActor that allows you to texture-map Flash content (even some interactive content) on ObjectActors. This feature will only work under Director MX 2004 and later.

-New ReplayAR behavior that transparently buffers all video and 3D transformations at runtime, giving you the ability to pause and review live video and tracker data when the need arises.

-You can now use LiveVideo (e.g. for marker tracking) without having to display the video in the background. Both Live and Playback video can be flipped horizontally and vertically.

-In LiveVideo, if you don’t specify a data directory the system will use the directory “Data” under the directory containing the Director file.

-System performs sanity checks to see if the DART data directory and camera calibration file are present when using the LiveVideo script, that the OpenGL renderer is being used (required for getting video into the background efficiently), and that the Xtra is present.

-A much more robust way of finding the target video texture in the Xtra.

-New DART-Debug cast that contains all debugging related behaviors.

-New MarkerDebugControls script that allows you to see the ARToolkit thresholded image with corner dots on detected markers while modifying the threshold value.

-Support for ARTag marker tracking (can be used instead of ARToolkit).

Posted by patrick keller at 10:47