
Wireless wonder chip


Regarding the issue of "tagging real space" with digital and dynamic content (see our previous post on visual markers for handheld cameras), a hardware solution is also on its way.

Read about it in MIT Technology Review.

Posted by patrick keller at 16:51

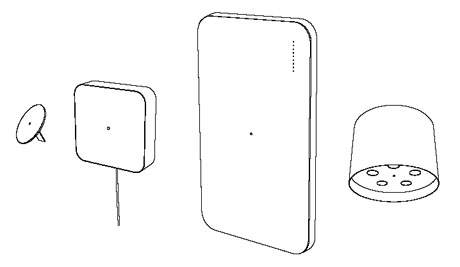

(web)Camera objects


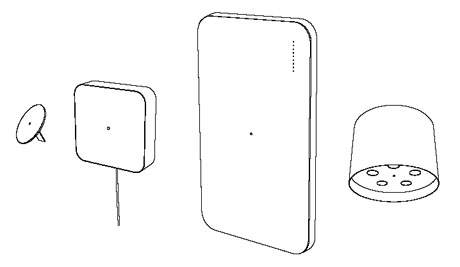

Aude Genton (project's assistant for object design) just sent me some documents/plans for the (web)cam objects. They mark a shift in the design language of the (web)cameras: they no longer look technological, and they have a second, "domestic" function. They can easily be hung on walls or ceilings and bring "computer vision" into homes or offices. They can also become blogjects, Skype phone-cameras, or "AR" and "Spatial configurations" trackers. They are challenging objects, as we know that bringing tracking cameras into our own homes raises some major questions.

In the current state, we have 3 mirrors (for walls, tables and hands), one of which is also a computer, and 1 light (ceiling).

Posted by patrick keller at 12:09

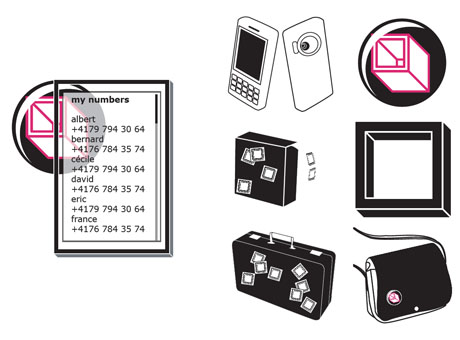

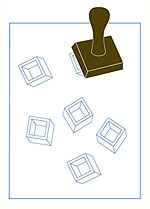

"AR Ready" simple objects based on AR signs/patterns


Based on the AR signs & patterns being developed by Tatiana Rihs (project's assistant for graphic design), we will be able to revamp many old-tech or paper-based products by adding AR, media, dynamic/networked content and interaction functionalities to them. They will become "AR ready" products.

We will use our XjARToolkit software for such extended rich media functionalities.

First samples by Tatiana include wallpaper, T-shirts, stationery, Post-its, ink stamps, stickers, posters, badges, fabric, etc.

Posted by patrick keller at 15:43

Video Tracking System of Spatial Configurations

The very first version of the "Video Tracking of Spatial Configuration (VTSC)" system developed by fabric | ch has been delivered to Julien Nembrini at EPFL. The system can monitor an unlimited set of volumes in a given space and detect whether each volume is filled or not. A volume is defined from the points of view of several USB webcams (shooting the same room from distinct locations, for example): the intersection of the zones defined in each of these points of view defines the volume.
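
A minimal Java sketch (Java being the language VTSC is written in) of how a volume can be derived from zones in several camera views. This is not the VTSC code: the `Zone` and `Volume` types, the camera and zone identifiers, and the rule that a volume counts as filled only when all of its zones are active are assumptions made for illustration (records require Java 16 or later).

```java
import java.util.List;
import java.util.Set;

class VolumeDemo {
    public static void main(String[] args) {
        Zone leftView = new Zone("cam-1", "chair-A");
        Zone topView = new Zone("cam-2", "chair-A");
        Volume chair = new Volume("chair-A", List.of(leftView, topView));

        // Only one camera sees something in its zone: not filled.
        System.out.println(chair.isFilled(Set.of(leftView)));          // false
        // Both cameras see something in their zones: filled.
        System.out.println(chair.isFilled(Set.of(leftView, topView))); // true
    }
}

// Hypothetical: a named 2D zone in the image of one given camera.
record Zone(String cameraId, String zoneId) {}

// Hypothetical: a volume defined by zones taken from several camera views.
record Volume(String name, List<Zone> zones) {

    // Assumed rule: the volume is filled only if every zone defining it is
    // currently active in its own camera view (approximating the intersection).
    boolean isFilled(Set<Zone> activeZones) {
        return !zones.isEmpty() && activeZones.containsAll(zones);
    }
}
```

Requiring all views to agree approximates the geometric intersection: an object seen by only one camera may lie anywhere along that camera's line of sight, outside the volume.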


The system works with a set of basic USB webcams. Each webcam is controlled by a dedicated application, which manages the 2D zones to be monitored in the image coming from its webcam and detects whether each zone is activated (filled) or not. All these applications are networked (meaning that the monitored volumes can even be in distinct remote locations), and all information is centralized in a main controller application known as the moderator. The moderator filters the received information and decides whether a volume is activated (filled) or not.
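
The post does not describe how a zone is detected as filled. One common, cheap approach is background differencing, sketched below under that assumption: the hypothetical `ZoneDetector` compares the current grey-level frame with a reference frame of the empty scene inside the zone's rectangle, and activates the zone when enough pixels have changed. Both thresholds are illustrative values, not VTSC settings.

```java
import java.awt.Rectangle;

class ZoneDetectorDemo {
    public static void main(String[] args) {
        int w = 8, h = 8;
        int[] background = new int[w * h]; // empty scene: all black
        int[] frame = background.clone();
        // Simulate an object covering the top-left 4x4 corner.
        for (int y = 0; y < 4; y++)
            for (int x = 0; x < 4; x++)
                frame[y * w + x] = 200;

        ZoneDetector detector = new ZoneDetector(30, 0.5);
        System.out.println(detector.isActivated(background, frame, w, new Rectangle(0, 0, 4, 4))); // true
        System.out.println(detector.isActivated(background, frame, w, new Rectangle(4, 4, 4, 4))); // false
    }
}

// Hypothetical zone detector based on simple background differencing.
class ZoneDetector {
    private final int pixelThreshold; // min grey-level change for a pixel to count as changed
    private final double areaRatio;   // min fraction of changed pixels to activate the zone

    ZoneDetector(int pixelThreshold, double areaRatio) {
        this.pixelThreshold = pixelThreshold;
        this.areaRatio = areaRatio;
    }

    // Frames hold grey levels (0..255) stored row by row, `width` pixels per row.
    boolean isActivated(int[] background, int[] frame, int width, Rectangle zone) {
        int changed = 0;
        for (int y = zone.y; y < zone.y + zone.height; y++) {
            for (int x = zone.x; x < zone.x + zone.width; x++) {
                int i = y * width + x;
                if (Math.abs(frame[i] - background[i]) > pixelThreshold) changed++;
            }
        }
        int area = zone.width * zone.height;
        return area > 0 && changed >= areaRatio * area;
    }
}
```

Background differencing is sensitive to lighting changes, so a real deployment would probably need to refresh the reference frame over time.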


Within the framework of this project, activating a volume will prompt EPFL's e-puck robots to organize themselves into a given configuration.


As the system is network-based, it is very convenient to deploy. The number of USB webcams involved, the number of computers needed and the location of these computers can easily be adapted to any kind of project or configuration. As mentioned previously, it is even possible to combine the monitoring of volumes located in different places in order to control something in yet another location. It can also easily be integrated into the Rhizoreality system developed by fabric | ch.
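
To illustrate how the per-camera applications might report to the moderator over the network, here is a sketch that sends one plain UDP datagram per zone state. The `ZoneMessage` type and its `cameraId;zoneId;0|1` text format are hypothetical; the actual VTSC protocol is not described in this post.

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

class ZoneMessageDemo {
    public static void main(String[] args) throws Exception {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Moderator side binds any free local port (port 0) for this demo.
        try (DatagramSocket moderator = new DatagramSocket(0, loopback);
             DatagramSocket camera = new DatagramSocket()) {
            moderator.setSoTimeout(2000);

            // Camera application side: report that a zone is now active.
            byte[] out = new ZoneMessage("cam-1", "chair-A", true).encode();
            camera.send(new DatagramPacket(out, out.length, loopback, moderator.getLocalPort()));

            // Moderator side: receive and decode the report.
            byte[] buf = new byte[256];
            DatagramPacket in = new DatagramPacket(buf, buf.length);
            moderator.receive(in);
            System.out.println(ZoneMessage.decode(in.getData(), in.getLength()));
        }
    }
}

// Hypothetical report format; identifiers must not contain ';'.
record ZoneMessage(String cameraId, String zoneId, boolean active) {

    byte[] encode() {
        return (cameraId + ";" + zoneId + ";" + (active ? 1 : 0)).getBytes(StandardCharsets.UTF_8);
    }

    static ZoneMessage decode(byte[] data, int length) {
        String[] parts = new String(data, 0, length, StandardCharsets.UTF_8).split(";");
        if (parts.length != 3) throw new IllegalArgumentException("malformed message");
        return new ZoneMessage(parts[0], parts[1], parts[2].equals("1"));
    }
}
```

UDP keeps each report lightweight and lets the moderator run on any machine. If each application re-sends its zone states periodically, a lost datagram is simply superseded by the next one; TCP would be preferable if every single transition had to be delivered.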


The tests we carried out revealed several limitations and points to take into consideration when deploying a VTSC configuration. We successfully plugged 4 USB webcams into the same computer (PC laptop or desktop) using a USB hub. Of course, the application controlling a given webcam must be installed and run on the same computer as that webcam (the video stream is not broadcast over the network). One basic computer was thus able to host 4 video analysis applications plus the moderator without any major frame rate loss.

One must keep in mind the USB limitation on cable length (around 10 m max.). It should be possible to connect a webcam over a longer distance using a USB repeater or a USB-to-RJ45 converter, but these options were not tested or used within this project.

Of course, the more powerful the host computer, the more webcams it should be able to handle, keeping in mind that the USB bus has its own bandwidth limit, which restricts the number of cameras that can be connected to the same computer without losing the video signal or suffering a major frame rate loss.
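
A rough way to reason about the bandwidth limit is to compare each camera's raw video bit rate with the usable bus bandwidth. The sketch below assumes USB 2.0 high speed (480 Mbit/s) with about 80% available for isochronous video transfers, and uncompressed YUY2 video at 16 bits per pixel. These are assumptions, and the results are theoretical upper bounds: protocol overhead, shared host controllers and webcams limited to USB 1.1 full speed (12 Mbit/s) will lower them, while compressed streams would raise them.

```java
class UsbBudgetDemo {
    // Raw bit rate of an uncompressed video stream, in bits per second.
    static long streamBitsPerSecond(int width, int height, int bitsPerPixel, int fps) {
        return (long) width * height * bitsPerPixel * fps;
    }

    // How many such streams fit in the usable bus bandwidth (whole cameras only).
    static long maxCameras(long usableBitsPerSecond, long streamBitsPerSecond) {
        return usableBitsPerSecond / streamBitsPerSecond;
    }

    public static void main(String[] args) {
        // Assumption: USB 2.0 high speed, ~80% usable for isochronous transfers.
        long usable = 480_000_000L * 80 / 100; // 384 Mbit/s

        // Assumption: uncompressed YUY2 video, 16 bits per pixel.
        long vga30 = streamBitsPerSecond(640, 480, 16, 30);  // 147,456,000 bit/s
        long qvga15 = streamBitsPerSecond(320, 240, 16, 15); // 18,432,000 bit/s

        System.out.println("640x480 @ 30 fps: " + maxCameras(usable, vga30) + " cameras");  // 2
        System.out.println("320x240 @ 15 fps: " + maxCameras(usable, qvga15) + " cameras"); // 20
    }
}
```

The arithmetic shows why lowering the resolution or frame rate is the main lever for fitting more cameras on a single bus.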


The system is written in Java, but video signals are accessed through DirectX, so VTSC is restricted to running under Windows for now. This may change over time by replacing the module that accesses the webcams' video signal.
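
Making that module replaceable suggests isolating video capture behind a small interface, so that the DirectX-based code could be swapped without touching the analysis code. Below is a hypothetical sketch of such a `FrameSource` interface, with a synthetic implementation standing in for a real capture backend; none of these names come from VTSC.

```java
import java.util.Arrays;

class FrameSourceDemo {
    // Analysis code depends only on the interface, never on the capture backend.
    static double meanGrey(FrameSource source) {
        return Arrays.stream(source.grabFrame()).average().orElse(0);
    }

    public static void main(String[] args) {
        try (FrameSource source = new SyntheticFrameSource(4, 3, 128)) {
            System.out.println(source.width() + "x" + source.height()
                    + ", mean grey = " + meanGrey(source)); // 4x3, mean grey = 128.0
        }
    }
}

// Hypothetical replacement point for the webcam video signal access module.
interface FrameSource extends AutoCloseable {
    int width();

    int height();

    // Latest frame as grey levels (0..255), stored row by row.
    int[] grabFrame();

    // Narrowed so that callers need not handle a checked exception.
    @Override
    void close();
}

// Stand-in backend producing uniform frames, e.g. for testing without a camera.
class SyntheticFrameSource implements FrameSource {
    private final int width;
    private final int height;
    private final int grey;

    SyntheticFrameSource(int width, int height, int grey) {
        this.width = width;
        this.height = height;
        this.grey = grey;
    }

    @Override public int width() { return width; }

    @Override public int height() { return height; }

    @Override
    public int[] grabFrame() {
        int[] frame = new int[width * height];
        Arrays.fill(frame, grey);
        return frame;
    }

    @Override
    public void close() {
        // Nothing to release here; a camera backend would free the device.
    }
}
```

A Windows implementation would wrap DirectShow capture, and another platform could provide its own implementation of the same interface.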

Posted by fabric | ch at 10:56

Example use of the Video Tracking system (VTSC)

This example is based on a multi-purpose "architectural" table of the Joyn type (design: R. & E. Bouroullec, published by Vitra), measuring 540 x 180 cm. The various sizes of Joyn tables will be used for the rest of the project. The aim of this collaboration with the SWIS laboratory (within the framework of Workshop_04) is indeed not to develop tables, but essentially a "collaboration" between small robots (e-puck) and users in a context of micro-spatiality.


The EPFL e-puck robots could move within an area delimited by a black line laid on the table (red parallelepiped in the image). For now, the black line must be present to keep the robots' movements safe. The robots depend on a dedicated video tracking system to control their movements on the table. If this system fails, the robots could simply fall off the table by continuing a movement that was not invalidated by the dedicated video tracking system. These robots are currently partially autonomous, but all research efforts aim at making them fully autonomous (no longer dependent on an external video tracking system).


A set of cameras (USB webcams) monitors a set of volumes around the table as well as on it: is a chair occupied, has a participant put their elbows on the table, or just a hand, etc. Depending on which volumes are occupied, the robots are prompted toward certain activities: they move and organize themselves into a group configuration in order to "serve" the participants as well as possible.
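
The mapping from occupied volumes to robot activities could be expressed as a small rule function. The sketch below is purely illustrative: the volume names (`chair-1`, `table-hand`, `table-elbows`), the `Configuration` values and the priority between rules are hypothetical and not part of the EPFL robot controller.

```java
import java.util.Map;

class RobotSuggestionDemo {
    // Hypothetical group configurations the robots could be asked to form.
    enum Configuration { IDLE, GATHER_NEAR_SEATED, LINE_UP_NEAR_HANDS }

    // Hypothetical rules: hands or elbows on the table take priority over seating.
    static Configuration suggest(Map<String, Boolean> volumes) {
        long seated = volumes.entrySet().stream()
                .filter(e -> e.getKey().startsWith("chair-") && e.getValue())
                .count();
        boolean handOnTable = volumes.getOrDefault("table-hand", false)
                || volumes.getOrDefault("table-elbows", false);

        if (handOnTable) return Configuration.LINE_UP_NEAR_HANDS;
        if (seated > 0) return Configuration.GATHER_NEAR_SEATED;
        return Configuration.IDLE;
    }

    public static void main(String[] args) {
        System.out.println(suggest(Map.of("chair-1", true, "chair-2", false)));  // GATHER_NEAR_SEATED
        System.out.println(suggest(Map.of("chair-1", true, "table-hand", true))); // LINE_UP_NEAR_HANDS
        System.out.println(suggest(Map.of("chair-1", false)));                    // IDLE
    }
}
```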


The volumes monitored by the webcams are "natural" in the sense that their occupation results from the most ordinary human activity. Participants need no learning at all to use the system: they can rely solely on their intuition and perception of space.


Posted by fabric | ch at 10:08

VTSC - Tech. Review

Video tracking systems are usually set up for object motion tracking or change detection. They are expected to run in real time, i.e. to analyze a live video stream and deliver the expected result immediately, without delay.

The most obvious applications of such systems are video surveillance (persons, vehicles) and even object guidance (missiles).

PFTrack

Optibase

Logiware

A large body of academic (http://citeseer.ist.psu.edu/676131.html) and commercial work exists, exploiting a well-known set of distinct methods. Usually, the better the algorithm, the heavier its CPU footprint.

Commercial solutions usually deliver very good results by relying on dedicated hardware, which makes it possible to run high-performance algorithms in real time.

In the framework of this project, a set of predefined constraints must be taken into account:

-> The tracking system must interact with an existing robot control system developed at EPFL

-> Low-cost hardware should be used for the cameras and for the computers (video stream analysis)

-> Several tracked-area activations, coming from distinct cameras, may be combined into a single decision (validated or not)

-> The number of cameras must be maximized (to track as many configurations as possible), while the number of computers needed for video analysis must be minimized

This set of constraints rules out commercial solutions, which can be expensive and may be hard to adapt to the scope of the experiment described here.

It also disqualified open-source or freely available video analysis systems because of missing functionality: for example, none of the projects we tested could handle several cameras connected to the same host computer.

Some of them also require a particular type of camera, compatible only with specific drivers (WDM for JMyron).

By developing a highly networked system based on commonly used technology (Microsoft DirectShow), we will be able to use any Windows-compatible webcam without particular limitations. This also means being able to access several camera video streams over USB from the same host computer.
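
Accessing several cameras from one host can be organized as one independent analysis loop per camera, so that a slow or stalled camera does not hold up the others. This Java sketch runs one thread per camera from a fixed pool; the camera identifiers are hypothetical, and frame grabbing and zone testing are left as a placeholder comment.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class MultiCameraDemo {
    // Runs one independent analysis loop per camera, each on its own thread,
    // and returns how many frames each loop handled.
    static Map<String, Integer> runAll(List<String> cameraIds, int framesPerCamera) {
        Map<String, Integer> handled = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, cameraIds.size()));
        for (String cameraId : cameraIds) {
            pool.submit(() -> {
                for (int i = 0; i < framesPerCamera; i++) {
                    // Placeholder: grab a frame from `cameraId` and test its zones here.
                    handled.merge(cameraId, 1, Integer::sum);
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("analysis loops did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return handled;
    }

    public static void main(String[] args) {
        Map<String, Integer> handled = runAll(List.of("cam-1", "cam-2", "cam-3", "cam-4"), 25);
        System.out.println(handled.size() + " cameras analysed, "
                + handled.get("cam-1") + " frames each"); // 4 cameras analysed, 25 frames each
    }
}
```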

The network layer will ensure that all video analysis data is centralized in a dedicated application in charge of validating a given decision (e.g. "are 3 persons sitting around the table: true or false?") and of making this information available, again over the network, to the robots' controller application (EPFL).
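
A sketch of how the central application could validate the example decision ("are 3 persons sitting around the table?"). It assumes, hypothetically, that each seat is a volume reported as filled or not, and adds a simple debounce: the answer only changes once the raw answer has been the same for a given number of consecutive updates, so that a single flickering report does not disturb the robots. Forwarding the decision to the robots' controller is omitted.

```java
import java.util.HashMap;
import java.util.Map;

class ModeratorDemo {
    public static void main(String[] args) {
        Moderator m = new Moderator(3, 2);
        m.update("chair-1", true);
        m.update("chair-2", true);
        System.out.println(m.update("chair-3", true)); // false: raw answer just became true
        System.out.println(m.update("chair-3", true)); // true: stable for 2 updates
    }
}

// Hypothetical moderator: "are at least `required` seats occupied?", debounced.
class Moderator {
    private final int required;
    private final int stableUpdates;
    private final Map<String, Boolean> seats = new HashMap<>();
    private boolean decision = false;  // last validated answer
    private boolean candidate = false; // raw answer currently being confirmed
    private int streak = 0;            // consecutive updates with the same raw answer

    Moderator(int required, int stableUpdates) {
        this.required = required;
        this.stableUpdates = stableUpdates;
    }

    boolean update(String seatVolume, boolean filled) {
        seats.put(seatVolume, filled);
        long occupied = seats.values().stream().filter(b -> b).count();
        boolean raw = occupied >= required;

        if (raw == candidate) {
            streak++;
        } else {
            candidate = raw;
            streak = 1;
        }
        if (streak >= stableUpdates) decision = candidate;
        return decision;
    }
}
```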

The video analysis itself can be based on any of the methods described in the numerous research papers in the literature, so that one method or another can be chosen according to the CPU footprint we can afford for the application.

New video tracking methods may even be added later, making it possible to have a set of networked video tracking applications, each running a different video analysis algorithm.
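
One way to let each application run a different algorithm is a common interface that every analysis method implements. The hypothetical `ZoneAnalyzer` interface below has two illustrative implementations with different CPU costs: per-pixel differencing, and a cheaper comparison of the zone's mean brightness. Neither is claimed to be a method used in VTSC.

```java
import java.util.Arrays;
import java.util.List;

class PluggableAnalysisDemo {
    public static void main(String[] args) {
        int[] reference = {10, 10, 10, 10};
        int[] current = {10, 90, 90, 10};
        List<ZoneAnalyzer> analyzers = List.of(new FrameDifference(30, 0.25), new MeanBrightnessShift(20));
        for (ZoneAnalyzer a : analyzers) {
            System.out.println(a.name() + ": " + a.isActivated(reference, current)); // both true
        }
    }
}

// Hypothetical plug-in interface; both arrays hold the grey levels of one zone's pixels.
interface ZoneAnalyzer {
    String name();

    boolean isActivated(int[] reference, int[] current);
}

// Per-pixel comparison: activated when enough pixels changed noticeably.
class FrameDifference implements ZoneAnalyzer {
    private final int pixelThreshold;
    private final double areaRatio;

    FrameDifference(int pixelThreshold, double areaRatio) {
        this.pixelThreshold = pixelThreshold;
        this.areaRatio = areaRatio;
    }

    @Override public String name() { return "frame difference"; }

    @Override
    public boolean isActivated(int[] reference, int[] current) {
        int changed = 0;
        for (int i = 0; i < current.length; i++) {
            if (Math.abs(current[i] - reference[i]) > pixelThreshold) changed++;
        }
        return current.length > 0 && changed >= areaRatio * current.length;
    }
}

// Cheaper: only compares the zone's average brightness.
class MeanBrightnessShift implements ZoneAnalyzer {
    private final double threshold;

    MeanBrightnessShift(double threshold) {
        this.threshold = threshold;
    }

    @Override public String name() { return "mean brightness shift"; }

    @Override
    public boolean isActivated(int[] reference, int[] current) {
        double before = Arrays.stream(reference).average().orElse(0);
        double after = Arrays.stream(current).average().orElse(0);
        return Math.abs(after - before) > threshold;
    }
}
```

The cheaper mean-brightness test can miss changes that leave the average unchanged (for example a dark and a light object entering the zone together), which is the kind of accuracy versus CPU trade-off discussed in this post.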

Posted by fabric | ch at 16:13

Webcam sphere (spycam sphere)


Loosely related to the webcam objects & mirrors by Aude for the *Variable environment/* project, this is a monitoring sphere that tracks all the space around itself. It is made of traditional video cameras and b&w screens, and it can apparently be rolled. There is no software behind it. Project by Jonathan Schipper.

See the video HERE.

Posted by patrick keller at 17:17

AR & Mirror (kind of)


An 'AR' project by Adam Somlai-Fischer in which images (augmented reality with brain images) seem to be projected onto a mirror, or something that looks like one. Again, something to look at for our project, as we wanted some kind of screen-based or projection-based mirror for augmented reality situations.

Check out the video HERE.

Posted by patrick keller at 17:16

Tracking camera & lights


Camera tracking (vision) at the scale of a huge hall, fed to a grid of rotating robotic neon lights. The behavior of the lights and the variability of the overall pattern depend on the number of users being tracked.

Video HERE. Project by Rafael Lozano-Hemmer

Posted by patrick keller at 17:14

Single function

Posted by |BRAM| at 11:40

"Get Info About Me"

Posted by |BRAM| at 10:23

"Fog Me"

Posted by |BRAM| at 9:57