fabric | ch

fabric | ch, a Swiss-based "architecture & research" studio whose works link worldwide networks and local space, materiality and immateriality, ... (and which therefore specializes in the creation of experimental spaces and architectures closely connected to emerging technologies, information and communication technologies in particular), has been part of the project since its start in June 2005 and has worked on it up to now.

Their work was mainly to develop spatial propositions and to produce a spatial tracking software for Workshop_4. They will also work on extensions of the AR Toolkit software, linking it with some of their open source spatial software (Rhizoreality.mu) as well as with the development done by the EPFL.

-

fabric | ch is composed of two EPFL architects (Christophe Guignard & Patrick Keller), a telecommunication engineer (Stéphane Carion) and a computer scientist (Dr. Christian Babski).

Their works have been presented or exhibited mainly in Europe and the Americas (Siggraph, ISEA, File Rio & Sao Paulo, Centre Culturel Suisse - Paris, PixelAche, ART | Basel, DIS Boston, Architectural Association - London, Festival Lyon Lumières, MAMCO, EPFL, ICA - London, etc.).

-

-

Posted by patrick keller at 15:26

EPFL - SWIS - Swarm-Intelligent Systems Group

The Swarm-Intelligent Systems Group of the EPFL (Swiss Federal Institute of Technology), with Prof. Alcherio Martinoli and postdoctoral assistant Julien Nembrini, joined the Variable environment/ project and worked on Workshop#4 between June and December 2006.

Prof. Martinoli's laboratory belongs to the School of Computer and Communication Sciences, Institute of Communication Systems of the EPFL.

-

The SWIS laboratory is a member of Mobile Information & Communication Systems, a National Center of Competence in Research.

-

The area of interest of the laboratory is in swarm-intelligent collective robotics.

Within the context of this collaboration, we will work with the E-PUCK platform (see img below). The goal is to develop a collaboration between humans and swarm bots, with a minimal spatial & lighting function for the bots.

-

Posted by patrick keller at 15:10

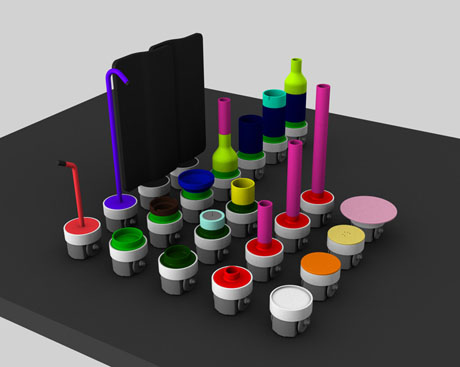

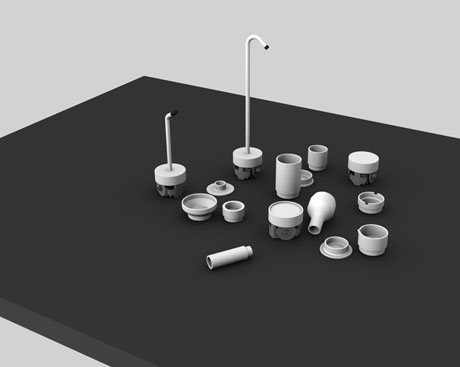

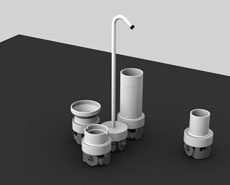

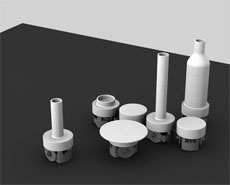

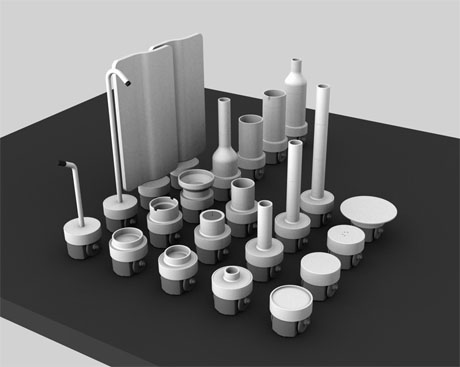

Rolling microfunctions / A scenario

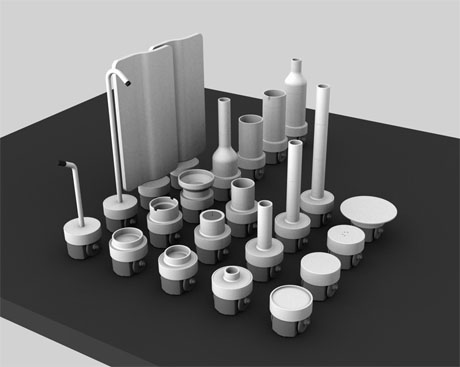

ROLLING MICRO-FUNCTIONS (for SOHOs)

Variable environment's Workshop by fabric | ch and SWIS-EPFL.

Design brief & AD by fabric | ch

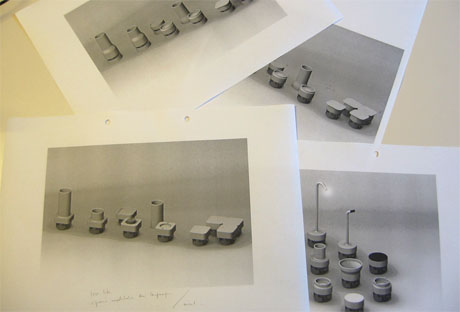

Object design by Laurent Soldini & Julien Ayer

-

What would happen if you were living, inviting your friend(s) and working in one single room? In a space that would therefore almost naturally evolve between very private functions and public ones, where the shape of the space wouldn’t change but where functions slowly migrate from one into another without the “user(s)” even really noticing it (the status of space would be movement).

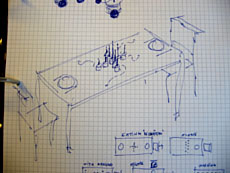

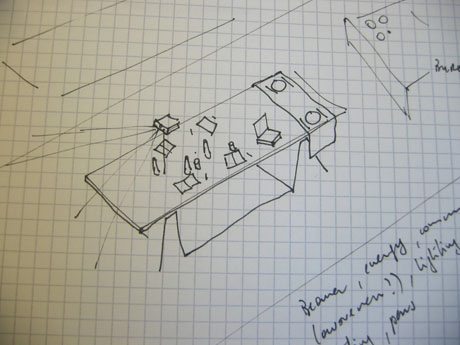

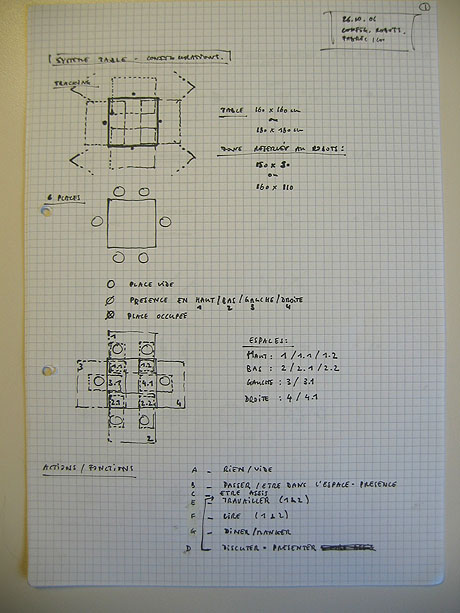

“Rolling micro-functions” is an attempt to illustrate and develop working propositions around this prospective theme at a micro-space scale (a long table). Our scenario for the workshop is one room equipped with a long table and several chairs (ideally something like the Bouroullecs’ Joyn table from Vitra, or even a hybrid bed-table, see link 1 or link 2, had it been further developed) where those evolving functions would occur (working, eating, relaxing and even sleeping).

In this room, a tracking system that gives information about the users’ activities and configurations will be necessary, as well as a set of robotic micro-functions that can reconfigure themselves according to this captured user information, and possibly according to other information or invisible layers as well (networked information, digital world, stock quotes, dynamic data, electromagnetic fields, live data from air and biological tracking or from weather stations, news, etc.).

While most of our architectural spaces today are structured aggregations of separate, mono-functional rooms, usually partitioned (a room for sleeping, a room for cooking, a room for watching TV or eating, a room for bathing, etc.), a functional approach inherited from the modern period (one that consumes a lot of space and, by the way, also contributes to energy consumption problems), this project tries to suggest a different and speculative approach: the densification (urban room?), multiplication and variation of functions within one space, and therefore its evolutionary and continuous nature over time (from private to public and back, etc.).

-

Note1: this workshop took place over several months, between July and November 2006. As it involved software development as well as design proposals, it needed more time than some of the other workshops (with this project we wanted to reach “working demo” level as well as “fiction demo”), even though it was not full-time work.

As one part of the necessary technology pre-exists the workshop (E-Puck robots for education) and has some clear constraints (size, shape and topology, movement, computing capacities, type of sensors, etc.), the attitude here is rather to illustrate, at a small scale, certain principles of our understanding of contemporary space (continuous, layered, variable rather than binary, partitioned and fixed) and to propose speculative artifacts for it. We also limited the information sent to the robots to local, user- and camera-based tracking.

We’ll therefore work here with micro-spaces and micro-functions that are not so convincing as a potential future product (who would pay $600 for a rolling ashtray?). But this was not really the purpose in this context: we wanted rather to experiment with (micro-)architectural agents/behaviors, and the results could/should be extrapolated to bigger scales.

-

Note2: the project implies the development of software (“tracking of spatial configuration”) to pass information about spatial usage to the “E-Puck” robots. The development of this webcam-based architectural software has been undertaken by fabric | ch.

The robots are also tracked by another camera, whose software is under development at the EPFL.

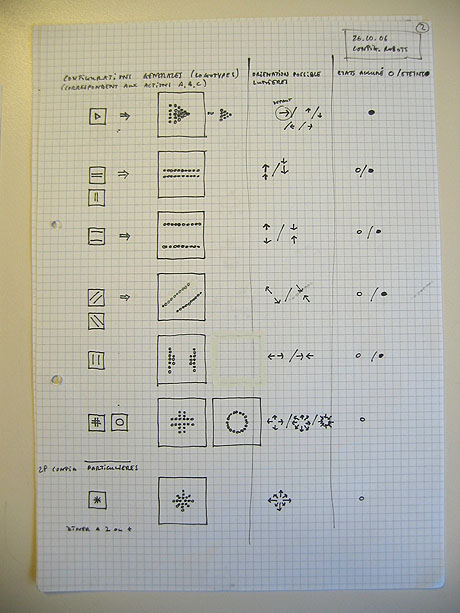

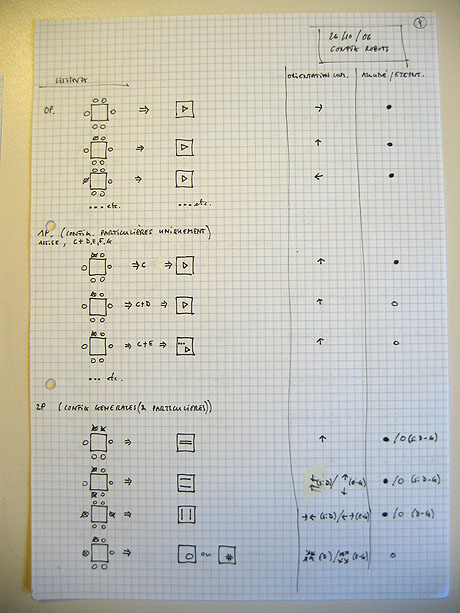

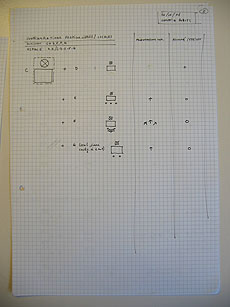

Finally, a set of rules (behaviors) for positioning the robots according to the users’ configurations is also necessary. It acts as a kind of grouping language for the robots. The overall system therefore resembles something in between a kind of “dot-matrix printer for micro-functions” and an "autonomous system" (swarm-intelligent). The idea is not that the robots “bring you an ashtray when you need one”, which would be uninteresting, but rather that they illustrate, through functional propositions and configurations, their understanding of what is going on in the room.

-

Posted by patrick keller at 14:25

E-Puck technology

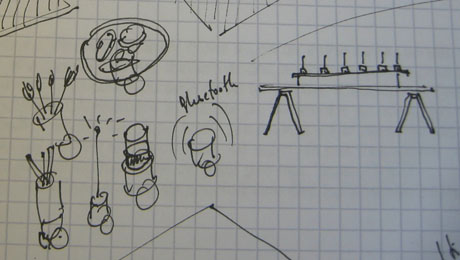

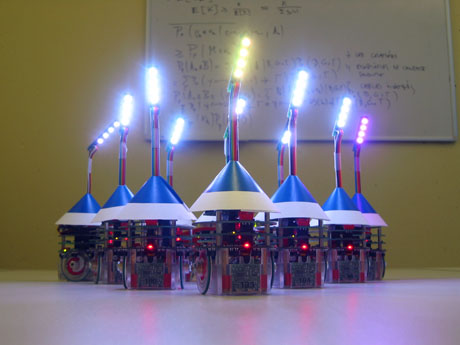

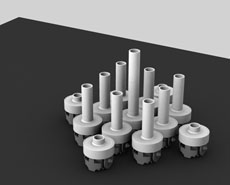

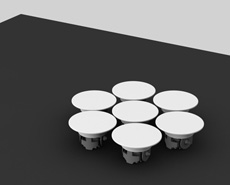

The E-Puck is an existing technology developed at the EPFL for educational purposes. It has limited built-in sensors and limited displacement and communication capacities. It can be extended the way a sandwich can have more layers... In our case, for our working demo and algorithm development, we will build a "lighting" robot only.

As you can see in the image below, lots of E-Pucks have already been built, so that "intelligent" swarm-robotic behaviors can be implemented.

-

Link to E-Puck resources online.

-

Posted by patrick keller at 14:23

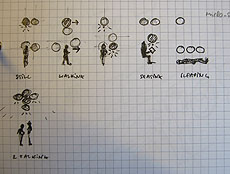

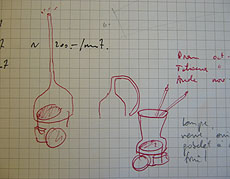

Other related works & sketches

-

We first had in mind to work with flying flocks of robots (see the sketches above, from spring 2006), still with the same kind of functional/spatial variability in mind. This was in part because Prof. Alcherio Martinoli and Dr. Julien Nembrini had already worked on a similar project.

It would probably have been more convincing at an architectural scale. But it turned out to be far too expensive and outside our time frame. That's why we switched to the E-Puck solution. The two sketches shown here were linked to that first approach.

At the time of the project, the Mascarillons project and the Flying flock (to be compared with Archigram's Instant City project, again!) were things we had seen. Since then, Ruairi Glynn and the Bartlett School of Architecture have carried out research in that direction, as has the Art Center Pasadena. We were also interested in the LIS-EPFL's blimp because it uses vision to fly and therefore needs a pretty straight visual environment...

Posted by patrick keller at 14:15

Workshop#4 result: VTSC (Visual Tracking of Spatial Configuration)

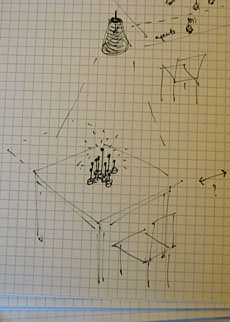

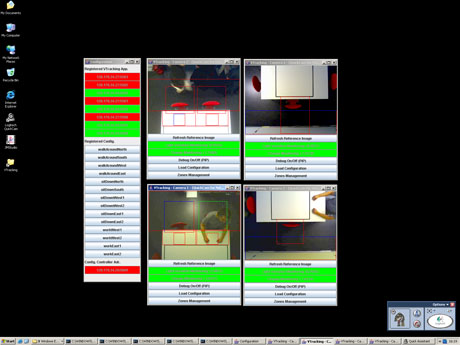

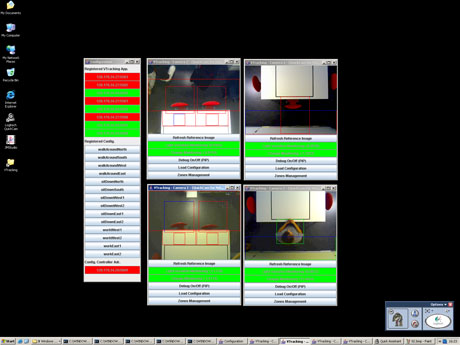

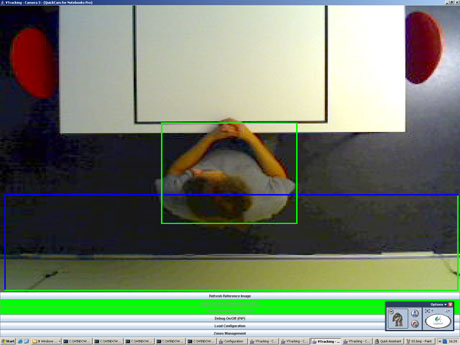

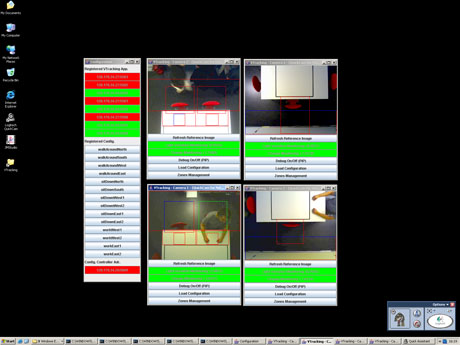

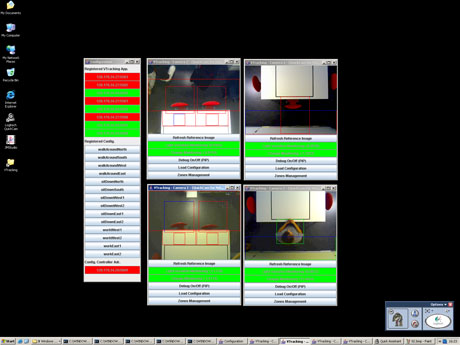

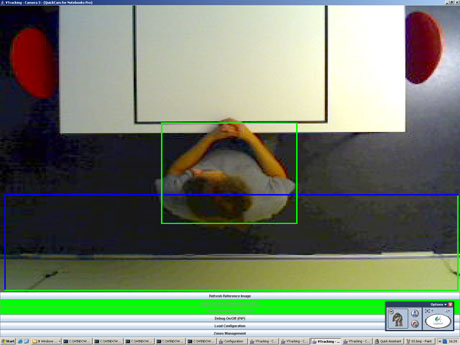

The Visual Tracking of Spatial Configuration software was developed by Christian Babski from fabric | ch for Workshop#4. It is a server/client-based tracking software to which you can add any number of tracking cameras and computers (4 cameras per computer).

The principle is that you can draw any number of "zones" in any camera view and that these zones can then be occupied (by a user, an object, etc.) or not (on/off status). Combining two cameras that look at the same part of space from different points of view (top and side, for example) lets you know whether this part of space is occupied, of course, but also whether a person is standing or sitting in the zone, for example. The server centralises the on/off status of the different cameras' zones, matches it against a configuration file (i.e. this zone + this zone "on" equals "a user is sitting on this chair") and passes this information to other applications (in our case the robots, thanks to Bluetooth communication).
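To make the zone-matching principle more concrete, here is a minimal sketch in Python. It is not the actual VTSC code, and the real configuration file format is not described here: the `Rule` class, the `match_configurations` function and the zone names are all hypothetical, chosen only to illustrate how on/off zone statuses from two cameras could be matched against a list of configurations. As an extra assumption, a rule may also require some zones to be "off", so that "sitting" and "standing" can be told apart.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One hypothetical configuration entry: zones that must be on/off."""
    required_on: frozenset
    meaning: str
    required_off: frozenset = frozenset()


def match_configurations(zone_status, rules):
    """Return the meaning of every rule satisfied by the current statuses.

    zone_status maps (camera, zone) pairs to True ("on") or False ("off").
    """
    on_zones = {zone for zone, is_on in zone_status.items() if is_on}
    return [
        rule.meaning
        for rule in rules
        if rule.required_on <= on_zones and rule.required_off.isdisjoint(on_zones)
    ]


# Hypothetical zones: one drawn in a top view, two drawn in a side view.
rules = [
    Rule(
        required_on=frozenset({("top", "chair_1"), ("side", "chair_1_low")}),
        required_off=frozenset({("side", "chair_1_high")}),
        meaning="a user is sitting on chair 1",
    ),
    Rule(
        required_on=frozenset({("top", "chair_1"), ("side", "chair_1_high")}),
        meaning="a user is standing at chair 1",
    ),
]

zone_status = {
    ("top", "chair_1"): True,
    ("side", "chair_1_low"): True,
    ("side", "chair_1_high"): False,
}

events = match_configurations(zone_status, rules)
print(events)
```

In such a setup, the server would recompute the matched configurations whenever a zone changes status and forward the result to the other applications.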

-

You can follow the development of the architectural software in this blog:

_ VTSC - Tech. Review

_ Exemple d'utilisation du Tracking Vidéo (VTSC)

_ Video Tracking System of Spatial Configurations

_ VTSC System - Testing

_ VTSC in use

Below, a couple of VTSC screenshots. Camera views are from the four ceiling cameras. Zone in red = "off", green = "on"...

Posted by patrick keller at 14:07